(Image: Zpool status and zfs list, minus all the VM LUNs/shares)

Performance

So that we are all on the same page: this is a home SAN used by a handful of desktops, laptops, and servers (some of which run a few VMs). Overall performance requirements are not onerous, and certainly nowhere near an enterprise's. That said, the little guy can run. Various iozone and bonnie benchmarks run locally lead me to believe that the 4-disk, 2-mirror-vdev pool 'home-0' is capable of some 500 MB/s of sequential large-block throughput best-case, roughly 250 random 8K write IOPS, and roughly 500 random 8K read IOPS, which is in keeping with what one may expect from a pool of this configuration. The throughput is more than enough to saturate the single Gbit NIC port, and in fact could saturate a few more were I inclined to throw in a card. I'll paste one iozone output: this was write/rewrite and read/re-read on a 1 GB file x 4 threads @ 128K with O_DIRECT on (even so, the reads are clearly coming through the ARC).

```
File size set to 1048576 KB
Record Size 128 KB
O_DIRECT feature enabled
Command line used: iozone -i 0 -i 1 -t 4 -s 1G -r 128k -I
Output is in Kbytes/sec
Time Resolution = 0.000001 seconds.
Processor cache size set to 1024 Kbytes.
Processor cache line size set to 32 bytes.
File stride size set to 17 * record size.
Throughput test with 4 processes
Each process writes a 1048576 Kbyte file in 128 Kbyte records

Children see throughput for 4 initial writers = 4013508.50 KB/sec
Parent sees throughput for 4 initial writers = 133536.91 KB/sec
Min throughput per process = 942170.62 KB/sec
Max throughput per process = 1084839.00 KB/sec
Avg throughput per process = 1003377.12 KB/sec
Min xfer = 910720.00 KB

Children see throughput for 4 rewriters = 4426626.19 KB/sec
Parent sees throughput for 4 rewriters = 187908.93 KB/sec
Min throughput per process = 1016124.94 KB/sec
Max throughput per process = 1168724.50 KB/sec
Avg throughput per process = 1106656.55 KB/sec
Min xfer = 913792.00 KB

Children see throughput for 4 readers = 9211610.25 KB/sec
Parent sees throughput for 4 readers = 9182744.11 KB/sec
Min throughput per process = 2298095.25 KB/sec
Max throughput per process = 2309781.00 KB/sec
Avg throughput per process = 2302902.56 KB/sec
Min xfer = 1043328.00 KB

Children see throughput for 4 re-readers = 11549440.75 KB/sec
Parent sees throughput for 4 re-readers = 11489037.68 KB/sec
Min throughput per process = 2084150.50 KB/sec
Max throughput per process = 3724946.00 KB/sec
Avg throughput per process = 2887360.19 KB/sec
Min xfer = 590848.00 KB
```
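To put those numbers in context, here is a rough Python sketch that (a) estimates the best case for a pool of mirror vdevs and (b) pulls the "Children see / Parent sees" lines out of iozone output and flags anything the spindles alone couldn't deliver. The per-disk figures (about 125 MB/s sequential and 125 random IOPS for a 7200 RPM drive) are assumptions, not measurements, although with two 2-way mirrors they land on the same ~500 MB/s, ~250 write IOPS and ~500 read IOPS described above. I'm also assuming iozone's "KB" means 1024 bytes.

```python
import re

# ASSUMED per-disk figures for a 7200 RPM SATA drive; adjust for your disks.
DISK_SEQ_MBS = 125      # sequential MB/s per disk
DISK_RAND_IOPS = 125    # random small-block IOPS per disk


def mirror_pool_ceiling(vdevs, disks_per_mirror=2):
    """Rough best-case numbers for a pool striped across N-way mirror vdevs."""
    disks = vdevs * disks_per_mirror
    return {
        # every write must land on all sides of a mirror, so writes scale per vdev
        "write_iops": vdevs * DISK_RAND_IOPS,
        "write_mbs": vdevs * DISK_SEQ_MBS,
        # any side of a mirror can serve a read, so reads scale per disk
        "read_iops": disks * DISK_RAND_IOPS,
        "read_mbs": disks * DISK_SEQ_MBS,
    }


# Matches iozone summary lines such as
# "Children see throughput for 4 readers = 9211610.25 KB/sec"
THROUGHPUT_RE = re.compile(
    r"(Children|Parent) sees? throughput for\s+\d+\s+(.+?)\s*=\s*([\d.]+)\s*KB/sec"
)


def parse_iozone(text):
    """Map (who, test) -> MB/s (10**6 bytes/s), treating iozone's KB as 1024 bytes."""
    return {
        (who.lower(), test): float(kbs) * 1024 / 1_000_000
        for who, test, kbs in THROUGHPUT_RE.findall(text)
    }


def cache_suspects(results, ceiling_mbs):
    """Results above what the disks can deliver, so memory must be involved."""
    return {k: round(v) for k, v in results.items() if v > ceiling_mbs}


if __name__ == "__main__":
    ceiling = mirror_pool_ceiling(vdevs=2)
    print(ceiling)
    sample = """Children see throughput for 4 initial writers = 4013508.50 KB/sec
Parent sees throughput for 4 initial writers = 133536.91 KB/sec
Children see throughput for 4 readers = 9211610.25 KB/sec"""
    print(cache_suspects(parse_iozone(sample), ceiling["read_mbs"]))
```

Fed the full output above, every "Children see" line clears the ~500 MB/s ceiling by a wide margin, which fits reads (and buffered writes) being served from or absorbed by memory rather than the disks.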

To reach this level of performance, I have the following minimal ZFS tunables in my /boot/loader.conf:

```
### ZFS
# still debating best settings here - very dependent on type of disk used
# in ZFS, also scary when you don't have homogeneous disks in a pool -
# fortunately I do at home. What you put here, like on Solaris,
# will have an impact on throughput vs latency - lower = better latency,
# higher = more throughput but worse latency - 4/8 seem like a decent
# middle ground for 7200 RPM devices with NCQ
vfs.zfs.vdev.min_pending=4
vfs.zfs.vdev.max_pending=8
# 5/1 is too low, and 30/5 is too high - best wisdom at the moment for a
# good default is 10/5
vfs.zfs.txg.timeout="10"
vfs.zfs.txg.synctime_ms="5000"
# it is the rare workload that benefits from this more than it hurts
vfs.zfs.prefetch_disable="1"
```
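Because a typo in loader.conf tends to fail quietly, it can be worth confirming that the running kernel actually picked these values up. Here is a minimal sketch, assuming Python 3.7+ on the SAN itself. It parses loader.conf-style name=value lines (naively: a '#' inside a quoted value would be treated as a comment) and compares each vfs.zfs.* entry against `sysctl -n`. A tunable the running kernel doesn't know about simply shows up as live=None.

```python
import os
import subprocess


def parse_loader_conf(text):
    """Parse name=value / name="value" lines; '#' starts a comment (naively)."""
    settings = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if "=" not in line:
            continue
        name, value = line.split("=", 1)
        settings[name.strip()] = value.strip().strip('"')
    return settings


def live_sysctl(name):
    """Current kernel value via `sysctl -n NAME`, or None if unavailable."""
    try:
        out = subprocess.run(["sysctl", "-n", name], capture_output=True, text=True)
    except FileNotFoundError:  # no sysctl binary on this machine
        return None
    return out.stdout.strip() if out.returncode == 0 else None


if __name__ == "__main__":
    path = "/boot/loader.conf"
    if os.path.exists(path):
        with open(path) as f:
            wanted = parse_loader_conf(f.read())
        for name, value in sorted(wanted.items()):
            if name.startswith("vfs.zfs."):
                live = live_sysctl(name)
                flag = "ok" if live == value else "CHECK"
                print(f"{flag:5} {name}: loader.conf={value} live={live}")
```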

iSCSI

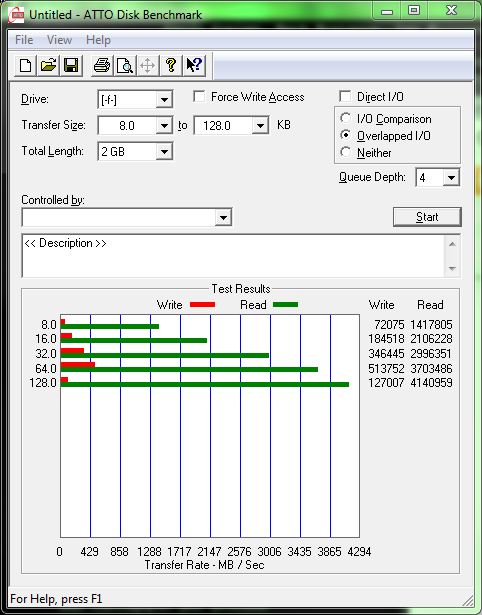

What's more important is how it performs for clients. My main box is (gasp) a Windows 7 desktop. I poked around a bit and found 'ATTO', which I recall a customer once using to benchmark disks on Windows. I can't speak to its 'goodness', but it returned the results I'd expect with Direct I/O checked on or off. I'm only really interested in the transfer sizes I ever expect to use, so 8K to 128K, and I maxed out the 'length' ATTO could go to, 2 GB. That is still well within the range of my ARC, but ATTO's Direct I/O checkbox works quite well and seems to force real reads. As you can see in the first picture, the iSCSI disk (F:) performs quite admirably considering this is between two standard Realtek NICs on desktop motherboards going through a sub-$200 home 1 Gbit switch, all running at the standard 1500 MTU.

(Image: 8 KB to 128 KB - 2 GB file - Direct I/O - queue depth 4)

For giggles, in the next picture you'll see the same test performed with the Direct I/O checkbox cleared. Look at me go! 4.1 GB/s read performance, across a 1 Gbit link! Obviously a little broken. ATTO also seemed to give up on the 128K transfer size (I ran a test across the full range of transfer sizes up to 8M, and it completely crapped out on the 8M size too, reporting nothing for read performance).
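As a quick sanity check on what "across a 1 Gbit link" can actually carry, the sketch below works out best-case TCP payload throughput from standard Ethernet framing overhead. It assumes IPv4, TCP timestamps enabled, and no VLAN tags. It also ignores iSCSI's 48-byte PDU headers, which are negligible at these burst sizes.

```python
# Rough ceiling on TCP payload throughput over Gigabit Ethernet.
# ASSUMPTIONS: IPv4, TCP timestamp option in use, no VLAN tag,
# iSCSI PDU header overhead ignored.
ETH_WIRE_OVERHEAD = 8 + 14 + 4 + 12   # preamble/SFD + Ethernet header + FCS + inter-frame gap
IP_TCP_HEADERS = 20 + 20 + 12          # IPv4 header + TCP header + timestamp option


def tcp_goodput_mbs(link_bps=1_000_000_000, mtu=1500):
    """Best-case TCP payload rate in MB/s (10**6 bytes/s) for full-size frames."""
    payload = mtu - IP_TCP_HEADERS       # bytes of application data per frame
    on_wire = mtu + ETH_WIRE_OVERHEAD    # bytes of line time each frame costs
    return link_bps / 8 * payload / on_wire / 1_000_000


if __name__ == "__main__":
    limit = tcp_goodput_mbs()
    print(f"1500 MTU ceiling: {limit:.1f} MB/s")
    print(f"a 4.1 GB/s reading is about {4100 / limit:.0f}x that")
```

This comes out to roughly 117 MB/s at a 1500 MTU, so a 4.1 GB/s figure on a single 1 Gbit link can only be the client answering from its own cache.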

For iSCSI, I opted for the istgt daemon rather than the older offering. My istgt.conf file is below in its entirety, with comments stripped. Note that my NodeBase and TargetName are set the way they are to mimic the settings from the old SAN, which removes any need to touch the iSCSI setup on my machines. I've left out most of the LUNs, but kept two to show how they are defined in this file:

```
[Global]
Comment "Global section"
NodeBase "iqn.1986-03.com.sun:02"
PidFile /var/run/istgt.pid
AuthFile /usr/local/etc/istgt/auth.conf
LogFacility "local7"
Timeout 30
NopInInterval 20
DiscoveryAuthMethod Auto
MaxSessions 32
MaxConnections 8
MaxR2T 32
MaxOutstandingR2T 16
DefaultTime2Wait 2
DefaultTime2Retain 60
FirstBurstLength 262144
MaxBurstLength 1048576
MaxRecvDataSegmentLength 262144
InitialR2T Yes
ImmediateData Yes
DataPDUInOrder Yes
DataSequenceInOrder Yes
ErrorRecoveryLevel 0

[UnitControl]
Comment "Internal Logical Unit Controller"
AuthMethod CHAP Mutual
AuthGroup AuthGroup10000
Portal UC1 127.0.0.1:3261
Netmask 127.0.0.1

[PortalGroup1]
Comment "T1 portal"
Portal DA1 192.168.2.15:3260

[InitiatorGroup1]
Comment "Initiator Group1"
InitiatorName "ALL"
Netmask 192.168.2.0/24

[LogicalUnit1]
TargetName iqn.1986-03.com.sun:02:homesan
Mapping PortalGroup1 InitiatorGroup1
AuthMethod Auto
AuthGroup AuthGroup1
UnitType Disk
LUN0 Storage /dev/zvol/home-0/ag_disk1 Auto
LUN1 Storage /dev/zvol/home-0/steam_games Auto
```
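Several of the [Global] keys above share their names with iSCSI login keys, and RFC 3720 (section 12) bounds those keys. Below is a small sketch of my own (not something istgt ships) that checks a subset of them. It enforces the numeric ranges, plus the rule that FirstBurstLength must not exceed MaxBurstLength. That rule still matters here, since RFC 3720 only treats FirstBurstLength as irrelevant when InitialR2T=Yes and ImmediateData=No. istgt-only keys such as MaxR2T and NopInInterval are ignored.

```python
# Allowed ranges for a subset of iSCSI login keys, per RFC 3720 section 12.
RFC3720_RANGES = {
    "MaxConnections": (1, 65535),
    "MaxRecvDataSegmentLength": (512, 2**24 - 1),
    "MaxBurstLength": (512, 2**24 - 1),
    "FirstBurstLength": (512, 2**24 - 1),
    "DefaultTime2Wait": (0, 3600),
    "DefaultTime2Retain": (0, 3600),
    "MaxOutstandingR2T": (1, 65535),
    "ErrorRecoveryLevel": (0, 2),
}


def parse_section(text):
    """Turn 'Key value' lines into a dict, skipping [Section] headers and comments."""
    params = {}
    for line in text.splitlines():
        parts = line.split(None, 1)
        if len(parts) == 2 and not parts[0].startswith(("#", "[")):
            params[parts[0]] = parts[1].strip().strip('"')
    return params


def check_login_keys(params):
    """Return a list of human-readable problems (empty if everything checks out)."""
    problems = []
    for key, (lo, hi) in RFC3720_RANGES.items():
        if key not in params:
            continue
        try:
            value = int(params[key])
        except ValueError:
            problems.append(f"{key}: {params[key]!r} is not a number")
            continue
        if not lo <= value <= hi:
            problems.append(f"{key}={value} outside {lo}..{hi}")
    first, max_burst = params.get("FirstBurstLength"), params.get("MaxBurstLength")
    if first and max_burst and first.isdigit() and max_burst.isdigit():
        if int(first) > int(max_burst):
            problems.append("FirstBurstLength must not exceed MaxBurstLength")
    return problems


if __name__ == "__main__":
    global_section = """[Global]
FirstBurstLength 262144
MaxBurstLength 1048576
MaxRecvDataSegmentLength 262144
ErrorRecoveryLevel 0"""
    print(check_login_keys(parse_section(global_section)) or "no problems found")
```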


(Image: 8 KB to 128 KB - 2 GB file - no Direct I/O - queue depth 4)


CIFS / Samba

Part of reaching this level of performance with CIFS to a FreeBSD Samba install was in the tuning, however. I went with Samba version 3.6, and my smb.conf entries for ZFS/AIO can be found below. Please bear in mind that I use CIFS at home for unimportant data that is snapshotted regularly, where I can afford to lose a few minutes of work; I'm also impatient and don't want to wait forever for a file copy. At the moment, the home SAN has no SSD for a SLOG. These settings are very likely not safe for in-flight data if power is lost (mostly due to aio write behind = yes):

```
[global]
...
socket options = IPTOS_LOWDELAY TCP_NODELAY SO_RCVBUF=131072 SO_SNDBUF=131072
use sendfile = no
min receivefile size = 16384
aio read size = 16384
aio write size = 16384
aio write behind = yes
...
```

I also have the following related changes to my /boot/loader.conf file:

```
aio_load="YES"
net.inet.tcp.sendbuf_max=16777216
net.inet.tcp.recvbuf_max=16777216
net.inet.tcp.sendspace=65536
net.inet.tcp.recvspace=131072
```
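For a sense of scale on those buffer sizes: to keep a link full, TCP needs roughly bandwidth × round-trip time bytes in flight. The sketch below computes that bandwidth-delay product for a 1 Gbit link. The RTT values are assumptions for a small switched LAN, so substitute whatever ping reports on your network.

```python
def bdp_bytes(link_bps, rtt_s):
    """Bandwidth-delay product: bytes in flight needed to keep a link busy."""
    return link_bps / 8 * rtt_s


RECVSPACE = 131072  # net.inet.tcp.recvspace from the loader.conf lines above

if __name__ == "__main__":
    # ASSUMED round-trip times for a small switched LAN; measure yours with ping.
    for rtt_ms in (0.2, 0.5, 1.0):
        need = bdp_bytes(1_000_000_000, rtt_ms / 1000)
        verdict = "fits within" if need <= RECVSPACE else "exceeds"
        print(f"RTT {rtt_ms} ms: {need / 1024:.0f} KiB in flight, {verdict} recvspace")
```

At these RTTs a 128 KiB receive buffer already covers the link. The 16 MiB *_max values only cap how far buffer autotuning may grow, which would matter on much longer or faster paths.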

And to my /etc/rc.conf file:

```
smbd_enable="YES"
nmbd_enable="YES"
```

FreeBSD

In all, I'm impressed on the whole, but finding a few things lacking. One example, while it is of no consequence to me, is something others have pointed out: had I been doing FC target work on my Solaris SAN, I'd have been unable to try this out, since FreeBSD lacks FC target capability as good as COMSTAR's. The istgt package seems to do iSCSI great, but it is limited to iSCSI.

There were also various personal learning curves. For a while, istgt would fail at boot, and it turned out that while I had zfs_load="YES" in my /boot/loader.conf file, I did not have zfs_enable="YES" in my /etc/rc.conf file. This is sneaky, because ZFS worked just fine (I could type 'zfs list' right after boot, and there were my datasets), but even after running zfs list, istgt wouldn't work until I created or renamed a zvol. It turned out FreeBSD wasn't creating the /dev/zvol links that istgt was looking for until a zvol was created or renamed; once I added zfs_enable="YES" to /etc/rc.conf, they were instantiated at boot time just fine.
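A pre-flight check would have pointed at the missing /dev/zvol entries much sooner than istgt did. Here is a hypothetical sketch (the script name and invocation are my own). It reads istgt.conf, pulls the backing path from each "LUNn Storage" line, and exits non-zero if any of those paths are absent. It is a diagnostic only; the actual fix was still zfs_enable="YES" in /etc/rc.conf.

```python
import os
import re
import sys

# Matches LUN lines such as "LUN0 Storage /dev/zvol/home-0/ag_disk1 Auto"
LUN_RE = re.compile(r"^\s*LUN\d+\s+Storage\s+(\S+)", re.MULTILINE)


def missing_backing_stores(conf_text, exists=os.path.exists):
    """Return LUN backing paths named in istgt.conf that are not present."""
    return [path for path in LUN_RE.findall(conf_text) if not exists(path)]


if __name__ == "__main__" and len(sys.argv) == 2:
    with open(sys.argv[1]) as f:
        missing = missing_backing_stores(f.read())
    for path in missing:
        print(f"missing: {path}")
    sys.exit(1 if missing else 0)
```

For example, run it as `python3 check_luns.py /usr/local/etc/istgt/istgt.conf`. That path is an assumption, chosen to sit beside the auth.conf referenced in the config above.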

I find the ports system flexible, powerful, and foreign. I'm probably committing a FreeBSD admin faux pas by installing the new 'pkg' system, but both before and after installing it I have occasionally fallen back to 'make install' in /usr/ports when necessary (for example, to compile Samba 3.6 with the options I wanted).

I do enjoy learning new things, though, so I'm quite pleased with the time spent and the results so far. My next steps will be:

- creating a robust automatic snapshot script/daemon

- seeing if I can't dust off my old Python curses skills and build a simple one-screen health monitor with various SAN-specific highlights to leave running on the LCD monitor plugged into the box

- going downstairs right now and finding the Crown and a Coke - pictures on other post can wait
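As a starting point for that first item, here is a minimal snapshot-rotation sketch. It takes a snapshot named with a hypothetical auto- prefix and a timestamp, then destroys the oldest auto- snapshots of that dataset beyond a retention count, leaving manually made snapshots alone. It shells out to zfs snapshot, zfs list and zfs destroy; double-check the flags (especially -d) against your release's zfs(8) man page, and try it on a scratch dataset before pointing it at real data.

```python
import subprocess
import sys
from datetime import datetime

PREFIX = "auto-"  # hypothetical prefix marking snapshots this script owns


def snapshot_name(dataset, when):
    return f"{dataset}@{PREFIX}{when:%Y%m%d-%H%M}"


def to_destroy(snapshots, keep):
    """Given full snapshot names oldest-first, pick our snapshots beyond the newest `keep`."""
    ours = [s for s in snapshots if s.partition("@")[2].startswith(PREFIX)]
    return ours[:-keep] if keep > 0 else ours


def zfs(*args):
    result = subprocess.run(["zfs", *args], check=True, capture_output=True, text=True)
    return result.stdout


def rotate(dataset, keep):
    zfs("snapshot", snapshot_name(dataset, datetime.now()))
    # -H: no header, -s creation: oldest first, -d 1: only this dataset's snapshots
    listing = zfs("list", "-H", "-t", "snapshot", "-o", "name",
                  "-s", "creation", "-d", "1", dataset)
    for snap in to_destroy(listing.split(), keep):
        zfs("destroy", snap)


if __name__ == "__main__" and len(sys.argv) == 3:
    rotate(sys.argv[1], int(sys.argv[2]))
```

From cron, this would be invoked as something like `python3 zfs_rotate.py home-0/documents 48` (the dataset name is hypothetical).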

Ciao!
